Layered Space II

August 18, 2014

Studio - Part II

{The following continues the investigation into the liminal boundaries of material and digital interactions}.

Hacking: a subversive method of co-creation, starting and ending in a non-proprietary code of conduct, that achieves results through an efficient means of communication. The medium, JavaScript, is cool by nature, requiring intense concentration from the reader / participant to comprehend and translate the outcome and effect. The eloquence of the language depends on the programmer, so in this respect style plays a large part in its legibility and its legacy. Take the following perspective from Marijn Haverbeke's Eloquent JavaScript: A Modern Introduction to Programming:

“A program is a building of thought. It is costless to build, it is weightless, and it grows easily under our typing hands. If we are not careful, its size and complexity will grow out of control, confusing even the person who created it. This is the main problem of programming: keeping programs under control. When a program works, it is beautiful. The art of programming is the skill of controlling complexity. The great program is subdued, made simple in its complexity.” -p.3

As in the great myth of modern design, complexity is sheathed in simplicity. Less is more. In the same way, the programmer must build in a transparency that makes the magic work. I say magic because that is the effect an executable command elicits when it runs without error: character strings composed into a function mystically produce a change in the environment. The undeniable caveat, however, is that it is still code, which becomes transparent only to a select few {sadly I am not part of that club…}. The path of least resistance is to investigate existing programs and graft them into the script. I started with motion detection.

The first step in creating a public access space with variable play is to determine the working parts. Since the physical space had been determined, a coordinate system was required to map motion detection and the velocity of bodies within it. In theory, a Cartesian grid of X, Y and Z would be ideal; however, only the X and Y variables would be required if overhead nodes could be placed at intervals of 5 feet (approx. 1500mm).
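A minimal sketch of the overhead node grid, assuming the 100ft x 25ft laneway dimensions given later in this post and nodes at exactly 5 ft spacing (5 ft is 1524mm, so 1500mm is a rounded metric figure). The function names are hypothetical; the point is that with overhead nodes, each detected body reduces to an X,Y pair that can be snapped to the nearest node.

```javascript
// Hypothetical sketch: overhead sensor nodes on a 2D (X,Y) grid.
// Assumes a 100 ft x 25 ft laneway and a node every 5 ft.
const LANE_LENGTH_FT = 100;
const LANE_WIDTH_FT = 25;
const NODE_SPACING_FT = 5;

// Build every node position, including the nodes on the laneway edges.
function buildNodeGrid(length, width, spacing) {
  const nodes = [];
  for (let x = 0; x <= length; x += spacing) {
    for (let y = 0; y <= width; y += spacing) {
      nodes.push({ x, y });
    }
  }
  return nodes;
}

// Snap a detected body position (in feet) to the nearest overhead node.
function nearestNode(pos, spacing) {
  return {
    x: Math.round(pos.x / spacing) * spacing,
    y: Math.round(pos.y / spacing) * spacing,
  };
}

// 21 positions along the length x 6 across the width = 126 nodes.
const nodes = buildNodeGrid(LANE_LENGTH_FT, LANE_WIDTH_FT, NODE_SPACING_FT);
// A body detected at (12.4, 3.1) snaps to the node at (10, 5).
const snapped = nearestNode({ x: 12.4, y: 3.1 }, NODE_SPACING_FT);
```

Snapping to nodes trades precision for simplicity: each body is located only to within half a node spacing (2.5 ft) in each direction.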

LeapMotion identifies moving objects on a small scale, detecting hand motion for gaming systems in a way akin to Kinect controls. It is an effective real-time tool for dexterous feedback, translating physical input actions into digital output.

A sample of the code was examined; below is a snippet from that process of inquiry. Full disclosure: working models were not achieved with LeapMotion, but gesture controls on a large scale are conceivable given the availability of consumer hardware and hacked code made public.

What can be deciphered from the sample above are the foundations of the JS language. A host server is specified to enact the controls. The controller is simply assigned to a variable name, so it can be referred to whenever it is needed. This lets the developer make updates efficiently.

The next item sets the detection refresh rate. Motion is captured by a camera device, using a triangulated targeting system in space to determine velocity, momentum and position. The refresh rate is variable, from 10fps to 24+fps, adjustable depending on the application, the quality required, and hardware limitations.
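Velocity falls out of the refresh rate directly: frames arrive 1/fps seconds apart, so a body's velocity is its displacement between consecutive frames multiplied by fps. The sketch below is hypothetical and does not use the LeapMotion API; it assumes positions are already available as X,Y coordinates in feet.

```javascript
// Hypothetical sketch: estimate a body's velocity from two consecutive frames.
// Positions are { x, y } in feet; fps is the detection refresh rate (10 to 24+).
function velocity(prev, curr, fps) {
  // Frames are 1/fps seconds apart, so dividing the displacement by that
  // interval is the same as multiplying it by fps.
  const vx = (curr.x - prev.x) * fps;
  const vy = (curr.y - prev.y) * fps;
  return { vx, vy, speed: Math.hypot(vx, vy) }; // ft/s
}

const v10 = velocity({ x: 0, y: 0 }, { x: 0.5, y: 0 }, 10); // speed: 5 ft/s
const v24 = velocity({ x: 0, y: 0 }, { x: 0.5, y: 0 }, 24); // speed: 12 ft/s
```

The same 0.5 ft jump between frames means 5 ft/s at 10fps but 12 ft/s at 24fps, so a velocity estimate is only meaningful alongside the rate it was sampled at.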

As in a web page that draws graphics, a canvas is created; in this case, the canvas represents the physical dimensions of the laneway, 100ft long x 25ft wide. Motion detected from the moving parts / bodies creates a path on the canvas.
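A minimal sketch of the laneway-as-canvas idea, assuming a scale of 8 pixels per foot (an arbitrary choice that gives an 800 x 200 px canvas for 100ft x 25ft). Each detected position is converted to pixels and appended to that body's path; the names here are hypothetical.

```javascript
// Hypothetical sketch: map laneway coordinates (feet) to canvas pixels
// and record the path each body traces. The scale is an assumed value.
const PX_PER_FT = 8;
const canvasSize = { width: 100 * PX_PER_FT, height: 25 * PX_PER_FT }; // 800 x 200

function toCanvas(pos) {
  return { px: pos.x * PX_PER_FT, py: pos.y * PX_PER_FT };
}

// paths maps a body id to the list of canvas points it has visited.
const paths = {};
function recordPosition(id, pos) {
  if (!paths[id]) paths[id] = [];
  paths[id].push(toCanvas(pos));
}

recordPosition("person1", { x: 0, y: 12.5 });  // enters at mid-width
recordPosition("person1", { x: 50, y: 12.5 }); // halfway down the lane
// paths.person1 is [{ px: 0, py: 100 }, { px: 400, py: 100 }]
```

In a browser, the stored points could then be drawn with the Canvas 2D context's `moveTo` and `lineTo` methods.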

Flocking

Flocking was introduced to this project as a means to apply audio with sine-wave visualization. It is an open-source framework developed by Colin Clark, who once described it as "determined by the failure of open-source coding", a failure that generated ubiquitous plug-in programs with limited capabilities for expression. In response, Flocking is built on JS and JSON {JavaScript Object Notation} without proprietary software or hardware: it runs in your browser {of all sorts}. It is an enabling platform whose output is whatever function the code describes. Colin's declaration sets out the mandate of Flocking more fully. A sample of Flocking code:

```javascript
// Create a new synth consisting of a sine wave,
// modulating its amplitude slowly with another sine wave.
var synth = flock.synth({
    synthDef: {
        id: "carrier",
        ugen: "flock.ugen.sinOsc",
        freq: 440,
        mul: {
            id: "mod",
            ugen: "flock.ugen.sinOsc",
            freq: 1.0,
            mul: 0.25
        }
    }
});
```
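To make explicit what the Flocking synthDef above describes, the sketch below computes the same signal directly in plain JavaScript rather than through Flocking: a 440 Hz sine carrier whose amplitude (`mul`) is itself a 1 Hz sine scaled by 0.25. It assumes both oscillators start at phase 0. The envelope |0.25·sin(2πt)| peaks twice per second, so the tone swells and fades twice a second and never exceeds 0.25.

```javascript
// Plain-JavaScript sketch of the synthDef above (not Flocking itself):
// output(t) = sin(2π·440·t) · (0.25 · sin(2π·1·t)), both starting at phase 0.
const CARRIER_FREQ = 440; // "carrier" freq
const MOD_FREQ = 1.0;     // "mod" freq
const MOD_MUL = 0.25;     // "mod" mul

function amSample(t) {
  const modulator = MOD_MUL * Math.sin(2 * Math.PI * MOD_FREQ * t);
  const carrier = Math.sin(2 * Math.PI * CARRIER_FREQ * t);
  return carrier * modulator; // the modulator acts as the carrier's amplitude
}

// Render one second of audio at a 44.1 kHz sample rate.
const SAMPLE_RATE = 44100;
const samples = new Float32Array(SAMPLE_RATE);
for (let i = 0; i < samples.length; i++) {
  samples[i] = amSample(i / SAMPLE_RATE);
}
```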

Ah-ha! moment

A pure tone is generated from the frequency input, along with a visualization of its amplitude / frequency. This model inspired the idea of a sine-wave audio visualization driven by the LeapMotion detector. In short, an individual walking into the space could create a modulation in auditory and visual stimuli, amplified by the layered environmental feedback systems in the Victoria Laneway. When one person entered the space, their presence would set off a tonal reaction, say a polyphonic synth, amplified by a discrete sound system running the length of the 100ft space. This in turn would drive a visualization of sine waves corresponding to the tones and moving in accordance with the person in space.
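A hypothetical sketch of the presence trigger: each update checks whether a tracked body is inside the laneway bounds and emits an event only when that state changes, so a tone would start on entry and stop on exit rather than retriggering every frame. The event names, the bounds check, and the idea of reporting how far along the 100ft length the body is (for choosing which speakers carry the tone) are assumptions for illustration; no audio is produced here.

```javascript
// Hypothetical presence trigger: emit "enter"/"exit" only on state changes.
const LANE = { length: 100, width: 25 }; // feet

function isInside(pos) {
  return pos.x >= 0 && pos.x <= LANE.length && pos.y >= 0 && pos.y <= LANE.width;
}

function makePresenceTracker() {
  let present = false;
  return function update(pos) {
    const inside = isInside(pos);
    if (inside === present) return null; // no change, so no new trigger
    present = inside;
    // On entry, report the fraction of the lane length (0 = start, 1 = end),
    // e.g. to pick which speakers along the sound system carry the tone.
    return inside ? { event: "enter", along: pos.x / LANE.length } : { event: "exit" };
  };
}

const track = makePresenceTracker();
const events = [
  { x: -3, y: 10 },  // still outside the laneway
  { x: 1, y: 10 },   // steps in: "enter"
  { x: 5, y: 11 },   // still inside: no event
  { x: 101, y: 11 }, // leaves at the far end: "exit"
].map(track).filter(Boolean);
```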

sinewave2.js

```javascript
// Each subject (person) entering the laneway is described by an object
// holding its visual colour, its sine-wave image, and its audio tones.
var person1 = {
    colour: "blue",
    sinWave: "samples_length1",
    audio: [0, 2, 4] // tones in half-second intervals, key of C: 0=G, 2=B, 4=D
};

var person2 = {
    colour: "red",
    sinWave: "samples_length2",
    audio: [3, 5, 7] // tones in half-second intervals, key of C: 3=C, 5=E, 7=G
};
```
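One reading of the tone numbers in sinewave2.js, consistent with its comment (0=G, 2=B, 4=D): they index the notes of the C major scale counted from G (G, A, B, C, D, E, F, G). On that reading, subject 1's 0,2,4 forms a G major triad and subject 2's 3,5,7 a C major triad. The sketch assumes G4 as the starting pitch and equal temperament tuned to A4 = 440 Hz; neither comes from the original code.

```javascript
// Hypothetical interpretation: tone numbers index C major notes counted from G.
const NOTE_NAMES = ["G", "A", "B", "C", "D", "E", "F", "G"];
// Semitones above the starting G for each scale step.
const SEMITONES = [0, 2, 4, 5, 7, 9, 10, 12];
const G4_MIDI = 67; // assumed starting pitch (MIDI note number)

// Equal-temperament frequency, tuned so MIDI 69 (A4) = 440 Hz.
function midiToHz(midi) {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

function triad(degrees) {
  return degrees.map((d) => ({
    note: NOTE_NAMES[d],
    hz: midiToHz(G4_MIDI + SEMITONES[d]),
  }));
}

const subject1 = triad([0, 2, 4]); // G, B, D (about 392.0, 493.9, 587.3 Hz)
const subject2 = triad([3, 5, 7]); // C, E, G (about 523.3, 659.3, 784.0 Hz)
```

Each triad's three frequencies could then be played in sequence at the half-second intervals the comment describes.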

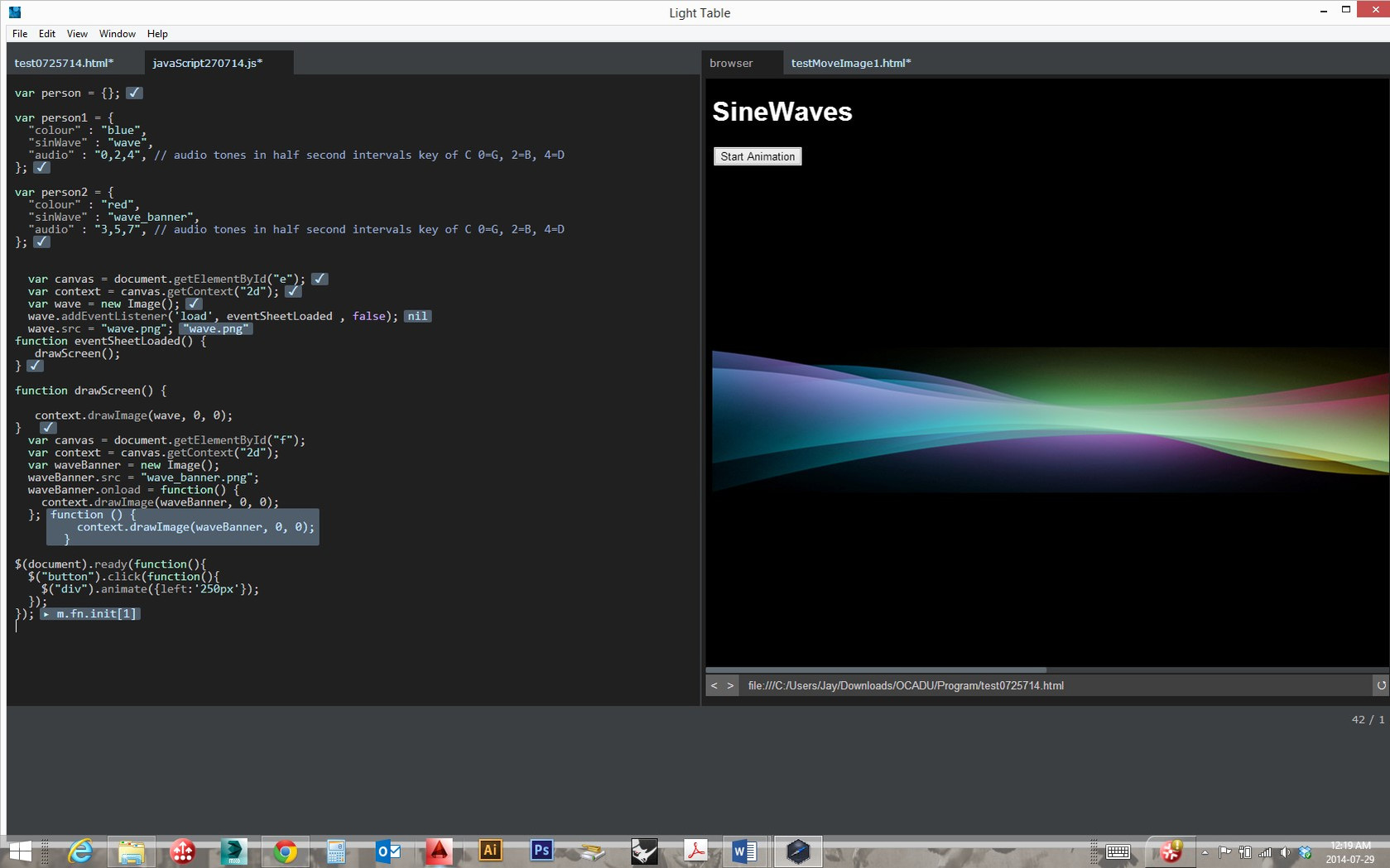

All this code does is identify an object / subject and assign it a colour, a wave image, and an audio output in triadic tones. A visualization of the waveform is required for testing in JS; a generic static image {below} is used to simulate an environment.

Subject 2 would be assigned a different set of tones and visual identifier - a second sine wave.

The intent is to create a harmonic reaction when the two subjects enter the canvas / laneway. Using the same JS approach, the objective is to create two canvases in HTML, each recording a subject's audio-visual presence in motion. The canvases overlap using CSS {Cascading Style Sheets} {the test wave visuals are PNG files, which allow transparent overlap}, and jQuery then animates the files, simulating the motion response.
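When two transparent PNG layers are stacked with CSS, the browser blends them with normal "source-over" compositing. For a top layer with opacity α over an opaque bottom layer, each colour channel becomes top·α + bottom·(1 − α). The sketch below computes that blend for a single pixel; the blue and red values and the 50% opacity are example values standing in for the two subjects' waves.

```javascript
// Source-over compositing of one semi-transparent pixel onto an opaque one.
// Colours are [r, g, b] in 0..255; alpha is the top layer's opacity in 0..1.
function sourceOver(top, alpha, bottom) {
  return top.map((channel, i) => channel * alpha + bottom[i] * (1 - alpha));
}

const blueWave = [0, 0, 255]; // subject 1's colour
const redWave = [255, 0, 0];  // subject 2's colour
const overlap = sourceOver(blueWave, 0.5, redWave);
// overlap is [127.5, 0, 127.5]: where the waves cross, the pixel turns purple
```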

From there, audio simulation would be added to the effects.

The varying, cross-referenced styles of CSS, JS, Canvas and jQuery are confusing, but when it all works, it is extremely satisfying. The abstraction of a coding language is a beautifully efficient expression, but if kept too cryptic or generic, it becomes unintelligible. The learning curve has been immense in terms of fundamental concepts, but near-zero in application. I can relate the abstraction of coding language to 3D software and CAD programs, which require a visualization of internal components to understand what drives surface developments and systems of organization. The details of a building, after all, are the foundations of its form.

The coding language has the potential to be developed in conjunction with 3D visualization software {Grasshopper, 3ds Max, maybe even AutoCAD}. While I have a grasp of 3D software, I am certainly limited in mathematical JavaScript applications. However, my interests lie not in the perfection of formulaic concepts, but in the generation of the unattainable and the impossible, which includes the breakdown of convention, of harmonics. Like an electric guitar and its amplifier, how does the feedback generate its own entity, its own character? When does the programming stop, and the generation of computational input begin?

To continue, the end goal would be a sine-wave response reacting to sound in real time. To achieve this, an algorithm based on a sine-wave formula would need to be devised to visualize a two-dimensional pattern on the X,Y axes. Add the Z axis, and a landscape with spatial depth begins to develop.

Shortcut {or, the direct line is the path of least resistance}

Due to the complexity of the JavaScript language, I opted for an alternative means of visualizing sound: After Effects. While not familiar with the program, I was intrigued by the simplicity of its output and began to play with the interface. After Effects condenses the JS programming aspiration into a streamlined effect: it is an extensive software program loaded with pre-visualization tools and sound applications. In particular, a built-in sound wave effect was explored. First, open-source sound effects were sourced, and these were then applied to the sound wave visualization effect in After Effects.

The result was fascinating: a dimensionalized graph in X,Y,Z developed in response to the frequencies of the sounds. The Hertz range was adjustable in "layers", with high and low frequencies isolated. Similar results can be found in the "Deep Planning" design process at Ben van Berkel's UNStudio. Social and economic relationships are made visible in a grid landscape formation, linking unseen insights and grafting them, in contrast, onto the physical landscape. New formations and relationships have the potential to reveal themselves when the virtual and real formations overlap.
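The frequency "layers" rest on splitting a sound into its frequency components. The sketch below is not how After Effects implements this; it is a naive discrete Fourier transform in plain JavaScript, run on a synthetic test signal (a 50 Hz tone plus a quieter 2000 Hz tone, both assumed values) and then grouped into low, mid and high bands so the two tones can be isolated. The band edges are arbitrary example values.

```javascript
// Naive DFT: amplitude of each frequency bin from 0 Hz up to the Nyquist frequency.
function amplitudeSpectrum(signal) {
  const n = signal.length;
  const amps = [];
  for (let k = 0; k <= n / 2; k++) {
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const angle = (2 * Math.PI * k * t) / n;
      re += signal[t] * Math.cos(angle);
      im -= signal[t] * Math.sin(angle);
    }
    // Scaled so a pure sine reads as its amplitude (except at 0 Hz and Nyquist).
    amps.push((2 * Math.hypot(re, im)) / n);
  }
  return amps;
}

// Strongest amplitude found between lowHz and highHz (inclusive).
function bandPeak(amps, sampleRate, n, lowHz, highHz) {
  let peak = 0;
  amps.forEach((a, k) => {
    const hz = (k * sampleRate) / n;
    if (hz >= lowHz && hz <= highHz) peak = Math.max(peak, a);
  });
  return peak;
}

// Synthetic test signal: 1.0 x 50 Hz plus 0.5 x 2000 Hz, sampled at 8 kHz.
const RATE_HZ = 8000;
const N = 800; // 0.1 s of audio, so each bin is 10 Hz wide
const signal = Array.from({ length: N }, (_, t) =>
  Math.sin((2 * Math.PI * 50 * t) / RATE_HZ) +
  0.5 * Math.sin((2 * Math.PI * 2000 * t) / RATE_HZ)
);
const amps = amplitudeSpectrum(signal);
const bands = {
  low: bandPeak(amps, RATE_HZ, N, 20, 250),    // close to 1.0 (the 50 Hz tone)
  mid: bandPeak(amps, RATE_HZ, N, 250, 1000),  // close to 0 (nothing here)
  high: bandPeak(amps, RATE_HZ, N, 1000, 4000), // close to 0.5 (the 2000 Hz tone)
};
```

A naive DFT costs O(N²); real-time tools use a fast Fourier transform instead, but the band grouping works the same way.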

In a project on intimate spatial relations, Hertzian Space, by Mark Taylor, realizes the malleable, invisible boundaries projected from bodies and maps their movements.

In another, real-time surveillance animates a space with coordinates, in a project at the top of the Rockefeller Center in New York {2006}.

Not satisfied with the preliminary sine-wave model, I searched further and found TrapCode, a plug-in application for responsive sound output with a configurable MAYA environment. Applying the same sound effect within the plug-in environment renders an organic animation.

As with a three-dimensional object in CAD, the user interface allows orbiting around the formation, so the structure can be studied from varying angles. The sample above is a longitudinal elevation {side view}, and below is an overhead perspective view of the same object.

The intention of this dual mode of seeing the object's responsive feedback is to render multiple surfaces of a physical environment as a seamless, wrapped space with immersive effects. The side view is mapped onto the walls of the passage, following subject 1 in real time. In tandem, a mapped view of the perspective landscape undulates and vibrates with every step.
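The two ways of seeing the same object can be sketched as two projections of one set of 3D points. Here the object is a hypothetical sine landscape, z = A·sin(2πx/λ)·cos(2πy/λ), standing in for the TrapCode form. The side elevation is orthographic (the Y axis is simply dropped), while the overhead perspective view uses a pinhole camera looking straight down from an assumed height, so points nearer the camera are spread further apart. Grid size, amplitude, wavelength and camera values are all assumptions.

```javascript
// Hypothetical sine landscape sampled on a grid: z = A·sin(2πx/λ)·cos(2πy/λ).
function sineLandscape(cols, rows, spacing, amplitude, wavelength) {
  const points = [];
  for (let i = 0; i < cols; i++) {
    for (let j = 0; j < rows; j++) {
      const x = i * spacing;
      const y = j * spacing;
      const z = amplitude *
        Math.sin((2 * Math.PI * x) / wavelength) *
        Math.cos((2 * Math.PI * y) / wavelength);
      points.push({ x, y, z });
    }
  }
  return points;
}

// Side elevation: orthographic projection onto the X,Z plane (drop Y).
function elevationView(p) {
  return { u: p.x, v: p.z };
}

// Overhead perspective: pinhole camera at (cx, cy, height) looking straight
// down with focal length f. Points nearer the camera (larger z) are magnified.
function overheadView(p, cam) {
  const depth = cam.height - p.z; // distance from camera to point along the view axis
  return {
    u: (cam.f * (p.x - cam.cx)) / depth,
    v: (cam.f * (p.y - cam.cy)) / depth,
  };
}

const landscape = sineLandscape(21, 6, 5, 2, 20); // 100 ft x 25 ft at 5 ft spacing
const camera = { cx: 50, cy: 12.5, height: 30, f: 30 };
const side = landscape.map(elevationView);
const plan = landscape.map((p) => overheadView(p, camera));
```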

Subject 2 is assigned a different audio identifier and colour:

and again

and here, subject 1 and subject 2 “overlap canvases” with individual responses

And finally, both effects translated into one waveform

These effects are synthesized into a conjoined pattern, yet further exploration could pursue more dynamic reactions {waves generating new forms, organic or sequenced}.
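In the simplest case, translating both effects into one waveform can be read as superposition: at each instant, the combined wave is the sum of the two subjects' waves. The sketch assumes each subject is represented by a single sine at an example frequency (392 Hz and 523.25 Hz, assumed values), each scaled to 0.5 so the sum cannot exceed the -1..1 range and clip.

```javascript
// Superposition: the combined wave is the sum of the individual waves.
// Frequencies and amplitudes are assumed example values.
function sineWave(freq, amplitude) {
  return (t) => amplitude * Math.sin(2 * Math.PI * freq * t);
}

const subject1Wave = sineWave(392.0, 0.5);  // subject 1's wave
const subject2Wave = sineWave(523.25, 0.5); // subject 2's wave

// Each wave is scaled to 0.5, so the sum stays within -1..1 (no clipping).
function combined(t) {
  return subject1Wave(t) + subject2Wave(t);
}

// Sample 0.1 s of the conjoined waveform at 44.1 kHz.
const RATE = 44100;
const conjoined = Array.from({ length: RATE / 10 }, (_, i) => combined(i / RATE));
```

Where the two waves are in phase they reinforce each other, and where they are out of phase they cancel. That interference is what gives the conjoined pattern a shape neither wave has alone.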

Next:

Synthesis - Part III: Projections, analysis